Social proof manipulation

Creating artificial or selectively amplified signals—likes, follows, reviews, or comments—to manufacture the appearance of popularity, trustworthiness, or momentum.

Overview

When people see a product, service, or idea receiving visible support (likes, follows, reviews, comments), they tend to assume it is genuinely valued. Social proof manipulation exploits that assumption to create the illusion of popularity, trust, or momentum.

Social proof manipulation takes three primary forms:

- Psychological engineering in "legitimate" marketing: Leveraging real social proof tools (testimonials, reviews, trust badges), sometimes stretched into grey tactics such as review gating, cherry-picked endorsements, and fake scarcity.

- Bot-based engagement manipulation: Fake likes, followers, reviews, and comments generated through bot farms, click farms, and automation scripts.

- Platform-controlled algorithmic steering: Boosting or suppressing content based on selective amplification signals, rigging visibility without needing bots.

Psychological triggers behind social proof manipulation

Social proof manipulation does more than fabricate popularity. It deliberately exploits built-in psychological biases so that people act on manufactured signals without questioning them:

- Similarity bias: We're more influenced by people who seem like us. Carefully targeted fake reviews, endorsements, or interactions simulate relatable peer validation.

- Conformity drive: Humans instinctively align with perceived group behavior. Manufactured engagement creates visible "social norms," pressuring others to follow.

- Cognitive efficiency: People seek cognitive shortcuts to avoid mental overload. Visible popularity ("100k likes can't be wrong") short-circuits deeper evaluation.

- Bandwagon effect: Individuals imitate perceived majority actions. Early fake momentum triggers cascades of real user engagement.

- Authority bias: Endorsements or interactions from high-status or high-follower accounts are often trusted without critical scrutiny.

- Herd behavior: People mirror collective actions in the belief that group behavior is safer or more accurate, even when the group is artificially engineered.
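The bandwagon and herd effects above can be illustrated with a textbook cumulative-advantage model (a Pólya urn). This model is an assumption chosen for illustration, not a description of how any real platform or audience behaves: each genuine user likes item A or item B with probability proportional to the likes each already displays. The Python sketch below compares how real users split when A starts with fake "seed" likes versus none; the function names and parameters are hypothetical.

```python
import random
from statistics import mean


def real_like_share(fake_seed: int, real_users: int, rng: random.Random) -> float:
    """Return the fraction of genuine users' likes that go to item A.

    Model (an illustrative assumption): items A and B each start with one
    organic like, A additionally gets `fake_seed` fake likes, and every real
    user then likes A or B with probability proportional to displayed likes.
    """
    likes_a, likes_b = 1 + fake_seed, 1
    real_a = 0
    for _ in range(real_users):
        if rng.random() < likes_a / (likes_a + likes_b):
            likes_a += 1
            real_a += 1
        else:
            likes_b += 1
    return real_a / real_users


def average_real_share(fake_seed: int, trials: int = 200,
                       real_users: int = 500, seed: int = 42) -> float:
    """Average real_like_share over many simulated launches (seeded for reproducibility)."""
    rng = random.Random(seed)
    return mean(real_like_share(fake_seed, real_users, rng) for _ in range(trials))


if __name__ == "__main__":
    print(f"no fake likes: {average_real_share(0):.2f} of real likes go to A")
    print(f"20 fake likes: {average_real_share(20):.2f} of real likes go to A")
```

In this model the expected share of real likes going to A equals A's initial share of displayed likes, so 20 fake likes (21 versus 1) steer roughly 21/22 of later genuine engagement toward A on average, while an unseeded launch splits about evenly.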

When social proof is manipulated at scale, it becomes nearly impossible to distinguish genuine popularity from manufactured visibility. Businesses make decisions based on distorted data, public opinion is skewed by engineered consensus, advertisers lose budget to click fraud, and democratic processes become vulnerable to influence operations.

Tactics

"Legitimate" techniques in marketing

Social proof manipulation is common in marketing.

On landing pages and ads

- Numerical proof: “Join 10,000+ businesses that grew leads by 27%.”

- Seller ratings: Embedding 5-star ratings through ad extensions.

- Sector authority: “Trusted by 65% of UK accounting firms.”

Landing page social proof hierarchy

- Primary: Client logos, usage statistics ("500K users").

- Secondary: Named testimonials, star reviews.

- Tertiary: Full case studies.

Other psychological plays

- Trust badges, real-time user notifications ("Jane from London just booked"), "As seen in" media mentions.

- Strategic placement (testimonials near forms, stats above the fold).

- Emphasizing similarity (targeted demographic testimonials).

Abuse cases

- Review gating: Only asking satisfied customers for reviews.

- Cherry-picking testimonials: Highlighting only the top 1% of feedback.

- Fake scarcity claims: "Only 3 left!" when fully stocked.
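To make the effect of review gating and cherry-picking concrete, the following Python sketch computes the displayed average star rating under each tactic. The ratings are invented for illustration, gating is simplified to "only customers rating 4 or 5 are invited, and all of them post", and `gated_ratings` and `cherry_picked` are hypothetical helpers, not part of any review platform's API.

```python
from statistics import mean

# Invented 1-5 star ratings from 20 customers (illustrative only).
all_ratings = [5, 5, 4, 4, 4, 3, 3, 3, 2, 2, 5, 4, 3, 1, 2, 4, 5, 3, 2, 1]


def gated_ratings(ratings: list[int], min_happy: int = 4) -> list[int]:
    """Review gating: only customers at or above min_happy are invited to post."""
    return [r for r in ratings if r >= min_happy]


def cherry_picked(ratings: list[int], top_fraction: float) -> list[int]:
    """Cherry-picking: display only the best top_fraction of feedback (at least one item)."""
    k = max(1, int(len(ratings) * top_fraction))
    return sorted(ratings, reverse=True)[:k]


print(f"all customers: {mean(all_ratings):.2f} stars")
print(f"review gating: {mean(gated_ratings(all_ratings)):.2f} stars")
print(f"top 10% shown: {mean(cherry_picked(all_ratings, 0.10)):.2f} stars")
```

With this data the honest average is 3.25 stars, gating lifts it to about 4.44, and showing only the top 10% displays a perfect 5.00, even though no individual rating was faked.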

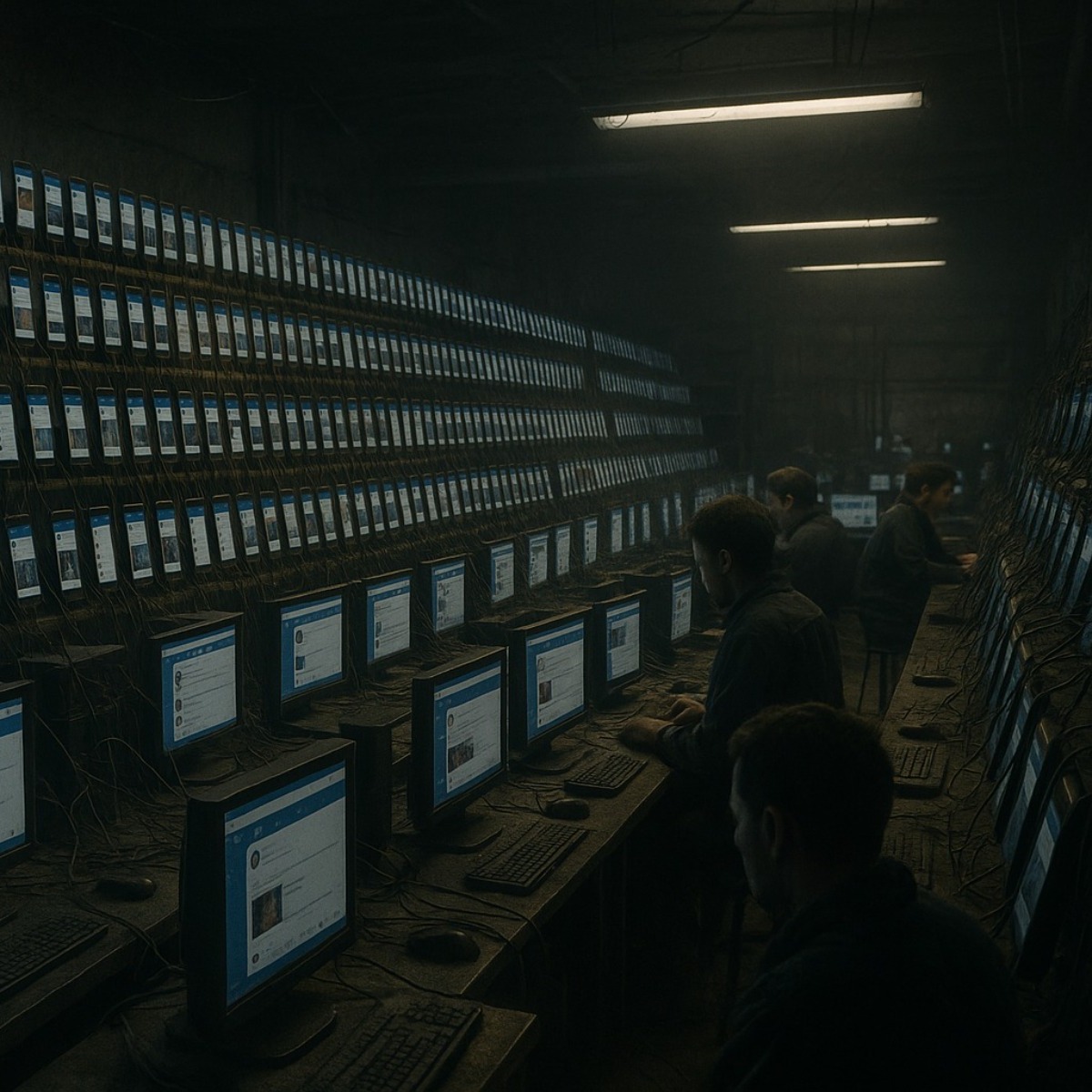

Bot-Driven Social Proof

Artificial social proof created through human labor, automated networks, or hybrid services to simulate credibility, momentum, or popularity at scale.

Sources of manipulation

- Click farms: Human workers manually liking, following, commenting, or viewing, typically in low-cost labor markets.

- Bot farms: Automated accounts that mimic human behavior, often driven by browser-automation tools such as Selenium, Puppeteer, or Playwright.

- Engagement marketplaces: Underground or gray-market platforms selling fake engagement packages. They often operate on Telegram and offer a blend of bots and click-farm labor for “natural-looking” interaction patterns.

Common methods

- AI-generated reviews, comments, and accounts: GPT-based models are used to mass-produce unique or paraphrased reviews, bios, and posts.
  - Emotional hooks (e.g., urgency, happiness, anger) are embedded to trigger higher trust and conversions.

- Algorithmic exploits:
  - Drip feeding: Gradual, time-controlled injection of fake engagement to simulate organic growth (e.g., 30 new followers per day instead of all at once).
  - Early spike tactics: Front-loading fake engagement immediately after posting to manipulate feed algorithms that prioritize early engagement velocity.
  - Detection evasion: Randomized actions, proxy rotation, device emulation, and aged accounts are used to avoid platform detection.
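From a defender's side, drip feeding and early spikes leave statistical fingerprints. The Python sketch below shows two simple heuristics under stated assumptions: drip-fed growth is suspiciously steady (a low coefficient of variation in daily gains), and front-loaded engagement concentrates in a post's opening minutes. The thresholds (0.15, and 60% of first-hour likes in the first 10 minutes) and all function names are hypothetical, not values used by any platform.

```python
from statistics import mean, pstdev


def looks_drip_fed(daily_gains: list[int], max_cv: float = 0.15) -> bool:
    """Flag follower growth that is suspiciously steady.

    Assumption: organic growth is bursty (new posts, shares, news), so its
    day-to-day coefficient of variation (std / mean) is usually high, while a
    scripted drip of ~30 followers per day is nearly flat. The max_cv default
    is a hypothetical illustration, not a calibrated threshold.
    """
    if len(daily_gains) < 7 or mean(daily_gains) <= 0:
        return False  # too little data (or no growth) to judge
    cv = pstdev(daily_gains) / mean(daily_gains)
    return cv < max_cv


def early_spike_ratio(likes_per_minute: list[int], window: int = 10) -> float:
    """Fraction of a post's first-hour likes that arrived in the first `window` minutes."""
    first_hour = likes_per_minute[:60]
    total = sum(first_hour)
    return sum(first_hour[:window]) / total if total else 0.0


def looks_front_loaded(likes_per_minute: list[int], window: int = 10,
                       max_share: float = 0.6) -> bool:
    """Flag posts whose first-hour engagement is concentrated in the opening minutes."""
    return early_spike_ratio(likes_per_minute, window) > max_share


if __name__ == "__main__":
    drip = [30, 31, 29, 30, 30, 32, 29, 30, 31, 30]
    organic_growth = [3, 40, 12, 0, 85, 7, 20, 5, 60, 9]
    print(looks_drip_fed(drip), looks_drip_fed(organic_growth))  # True False

    spiked = [50] * 5 + [1] * 55   # burst right after posting, then a trickle
    steady = [2] * 60              # evenly spread first-hour likes
    print(looks_front_loaded(spiked), looks_front_loaded(steady))  # True False
```

Heuristics this simple are easy to defeat, which is exactly what the detection-evasion techniques listed above aim to do; real detection systems combine many account, network, and content signals.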

Platform-Driven Social Proof

Platforms themselves can manipulate visibility based on who interacts with content, not through direct fraud but through algorithmic engineering:

- Platform owners or high-reach users boost or suppress content.

- Algorithms shape apparent popularity without traditional censorship, via feed ranking, deprioritization, and muting effects.
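Steering of this kind can be sketched as a ranking function in which the same engagement counts differently depending on who interacted. In this Python illustration, the `Post` fields, the base score, and the boost and penalty multipliers are all hypothetical assumptions, not any platform's actual ranking formula.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: str
    likes: int
    reposts: int
    engaged_by: set[str] = field(default_factory=set)  # IDs of accounts that interacted


def base_score(post: Post) -> float:
    """Hypothetical engagement score: a repost counts three times as much as a like."""
    return post.likes + 3 * post.reposts


def steered_score(post: Post, boosted: set[str], suppressed: set[str],
                  boost: float = 4.0, penalty: float = 0.25) -> float:
    """Same engagement, reweighted by who interacted (illustrative multipliers)."""
    score = base_score(post)
    if post.engaged_by & boosted:      # a privileged account touched the post
        score *= boost
    if post.engaged_by & suppressed:   # a deprioritized account touched the post
        score *= penalty
    return score


def rank(posts: list[Post], key: Callable[[Post], float]) -> list[str]:
    """Return post IDs ordered from most to least visible under `key`."""
    return [p.post_id for p in sorted(posts, key=key, reverse=True)]


posts = [
    Post("a", likes=900, reposts=100),                          # base 1200
    Post("b", likes=200, reposts=50, engaged_by={"owner"}),     # base 350
    Post("c", likes=1000, reposts=200, engaged_by={"critic"}),  # base 1600
]

print(rank(posts, base_score))                                         # ['c', 'a', 'b']
print(rank(posts, lambda p: steered_score(p, {"owner"}, {"critic"})))  # ['b', 'a', 'c']
```

No post's engagement numbers change; only the weight attached to who interacted does, which is why this kind of steering is hard to observe from outside the platform.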

Case study: Manufactured consensus on x.com (2025)

For further exploration of the technical details, see these articles:

- Joint Cybersecurity Advisory, State-Sponsored Russian Media Leverages Meliorator Software for Foreign Malign Influence Activity (2024) - Details the Meliorator system's structure, including Brigadir, Taras, bot archetypes ("souls"), automated action systems ("thoughts"), and how operators bypass platform defenses.

- Sam Sundar, Mastering Twitter(X) Automation: A Guide to Python Bots and Cron Jobs (2024) - Note: X permits bots that clearly identify as automated accounts and follow its automation rules.

- Muhammad Nazam, Mastering Instagram Automation: Create Your Own Python Bot in Easy Steps (2024) - Note: while the author suggests bots can be used "ethically" with a balance of human interaction, Instagram’s Terms of Use prohibit inauthentic behavior, including using automation tools to artificially boost engagement, and violations risk penalties such as account suspension.

Legal Considerations & Compliance

Fake engagement often crosses legal lines. In many jurisdictions (e.g., the U.S. and the EU), misrepresenting endorsements or popularity can violate consumer protection laws, advertising standards, and anti-fraud regulations. Platforms such as Instagram and Facebook also ban these practices under their terms of service.

Brands must ensure engagement is real and verifiable to comply with consumer protection rules (e.g., FTC rules in the U.S., EU directives). Failing to disclose paid endorsements, or using fake reviews, can trigger platform bans, regulatory fines, and legal action from competitors.

Further Reading

- NATO Strategic Communications Centre of Excellence, Social Media Manipulation for Sale: Experiment on Platform Capabilities to Detect and Counter Inauthentic Social Media Engagement (2024) - Finds that social media platforms still fail to counter cheap, fast bot-driven manipulation, now used for both spam and political influence. Small-scale disinformation remains largely undetected, transparency is poor, and the report calls for stricter regulation, better research collaboration, and a focus on both amplification and source content such as ads.

- Interpol, Beyond illusion (2024) - Provides a balanced analysis of synthetic media applications and challenges, including practical examples beyond text generation.

- Darren Linvill et al., Digital Yard Signs: Analysis of an AI Bot Political Influence Campaign on X (2024) - Detailed analysis of an AI-driven bot network of over 600 accounts targeting U.S. political conversations ahead of the 2024 elections.

- David Nevado-Catalán et al., An analysis of fake social media engagement services (2023) - Analysis of the fake engagement market across Social Media Marketing panels and underground forums.

- Blaž Rodič, Social Media Bot Detection Research: Review of Literature (2025) - Reviews methods, challenges, and trends in social media bot detection.

- Jaiv Doshi et al., Sleeper Social Bots: A New Generation of AI Disinformation Bots are Already a Political Threat (2024) - Paper on how advanced AI bots ("sleeper bots") are evolving into a major political disinformation threat.

On "legitimate" marketing tactics:

- Sean Park et al., The Effects of Social Proof Marketing Tactics on Nudging Consumer Purchase (2023) - Paper finds that positive reviews significantly boost purchase likelihood, while pop-ups have little effect and can even dilute review impact; adolescents, driven by conformity and fear of missing out, are highly vulnerable to credibility-based social proof in e-commerce.